The responsibilities of the CIO span a spectrum of managerial tasks. At one end of the spectrum is "supply", the delivery of IT resources and services to support business functions; at the other end is "demand", the task of helping the business innovate through its use of technology. Many CIOs admit that balancing demand and supply is difficult. Fortunately, CIOs now have a range of new opportunities and tools to help them manage and prioritize these competing demands. The process starts with an understanding of how new sourcing models can free up internal resources and funding for strategic business enablement and innovation.

While every CIO plans to align IT and business strategy, the irony is that few have enough time for effective strategic planning, and they usually blame demand-side pressures. Consider the challenges confronting the CIO:

The business side complains that its CIOs aren't up to speed on the issues confronting the business and can't think through the business-unit implications of systems trade-offs for planned implementations or proposed IT investments. At the same time, business leaders often struggle to assess how relevant new technologies are to safeguarding their competitiveness. More often than not, they say their CIOs are not proactively bringing them new ideas about how technology can help them compete more effectively.

Part of the problem stems from the inherent conflict between managing supply and shaping demand. CIOs are often required to reduce total IT spending, for instance, while also investing to support future scenarios, even though these upgrades will increase IT operating costs. It's a tough job to be both a cost cutter and an innovator, and the CIO sometimes ends up compromising one of the two roles. Structural issues, where parts of the organization are controlled by other executives, also complicate the job. Business-unit leaders want more IT leadership, but they are wary of CIOs who don't tread carefully around their boundaries. Strategic IT management calls for improvements on the demand side. Managing the demand side of the equation broadly covers:

- A financial understanding of costs and benefits;

- Business accountability for IT; and

- A clear framework for technology investments.

CIOs shift their attention among these three core components as their role evolves: once operations are stabilized and business credibility has been established, the emphasis moves toward working more closely with the business, which opens up opportunities to contribute to strategic initiatives and direction.

In practice, CIOs who meet and exceed business expectations are rewarded with greater participation in their enterprise's business strategy and higher budgets, and they win favor with the business side. In most cases, these CIOs also have the ear of the CEO through a direct reporting relationship. CIOs need to understand not only how the supply-side and demand-side roles differ but also when to shift from one to the other; move too soon or too late and credibility with business leaders will suffer.

This month IBM released the findings of a new global study of more than 2,500 chief information officers (CIOs) across 19 industries and 78 countries. The study confirms the strategic role CIOs play in helping their businesses become visionary leaders of innovation and financial growth. Many CIOs are becoming much more actively engaged in setting strategy, enabling flexibility and change, and solving business problems, not just IT problems.

The report, replete with insights, is an excellent collection, and I started by looking at some of the themes and associated metrics that preoccupy CIOs the most. I was struck to find that more and more CIOs appear to be genuinely focused on pulling the growth lever of IT and business, rightly turning more of their attention towards innovation. As one observer quipped, over time the role of the CIO is less and less about technology and more and more about strategy. That hits the nail on the head.

As the role of the CIO transforms, so do the types of projects CIOs lead across their enterprises, allowing them to spend less time and fewer resources on running internal infrastructure and more time on transformation that helps their companies grow revenue. CIOs are reshaping their infrastructure to focus on innovation and business value rather than simply running IT. The report finds that today’s CIOs spend an impressive 55 percent of their time on activities that spur innovation, including generating buy-in for innovative plans, implementing new technologies and managing non-technology business issues. The remaining 45 percent goes to essential, more traditional CIO tasks related to managing the ongoing technology environment, such as reducing IT costs, mitigating enterprise risks and leveraging automation to lower costs elsewhere in the business.

Not every CIO makes the cut, of course. The report says High-growth CIOs actively integrate business and IT across the organization 94 percent more often than Low-growth CIOs. The study notes that CIOs spend about 20 percent of their time creating and generating buy-in for innovative plans, but High-growth CIOs do certain things more often than Low-growth CIOs: they co-create innovation with the business, proactively suggest better ways to use data and encourage innovation through awards and recognition.

Fifty-six percent of High-growth CIOs use third-party business or IT services, versus 46 percent of Low-growth CIOs. The study also found that High-growth CIOs use collaboration and partnering technology within the IT organization 60 percent more often than Low-growth CIOs. Even more striking, High-growth CIOs use such technology across the entire organization 86 percent more often than Low-growth CIOs.

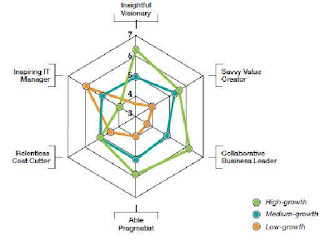

Successful CIOs, the report notes, blend three pairs of roles. At any given time, a CIO is:

• An Insightful Visionary and an Able Pragmatist

• A Savvy Value Creator and a Relentless Cost Cutter

• A Collaborative Business Leader and an Inspiring IT Manager

By adjusting the balance within each pair, the study reports, CIOs carry out the tasks that make innovation real, raise the ROI of IT and expand IT's business impact.

Other key findings of the survey:

• CIOs are continuing on the path to dramatically lower energy costs, with 78 percent undertaking or planning virtualization projects.

• 76 percent of CIOs anticipate building a strongly centralized infrastructure in the next five years.

IBM's CIO Pat Toole has commented on the findings. In addition to the detailed personal feedback from CIOs, IBM incorporated financial metrics and detailed statistical analysis into the findings. Based on CIO feedback from the study, the report also highlights a number of recommendations, ranging from strategic business actions to the use of key technologies, that IBM has identified for CIOs to implement.

(Picture courtesy: IBM)